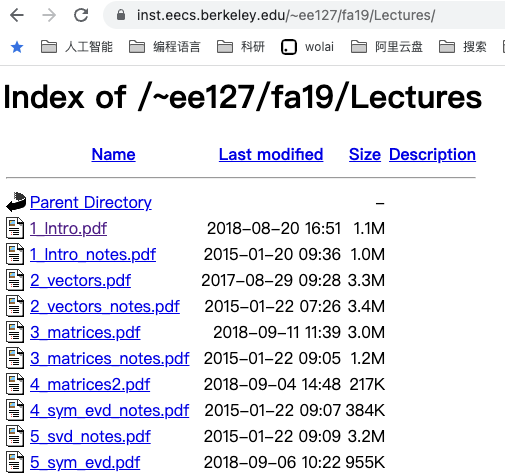

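Many universities abroad put their course slides on a single dedicated page, as in the figure, so it is easy to download them all in one go with a small crawler.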

The idea is to find the links on the page that end in pdf and then download each one.
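As a minimal sketch of the link-filtering step on its own, the snippet below uses the standard library's `HTMLParser` in place of BeautifulSoup so that it runs without extra packages. The names `PdfLinkParser`, `find_pdf_links`, and the `example.edu` page are hypothetical and only for illustration. It also resolves relative links with `urljoin` and matches the extension case-insensitively, which the post itself does not do.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class PdfLinkParser(HTMLParser):
    """Collect absolute URLs of <a href> targets whose path ends in .pdf."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.pdf_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != 'a':
            return
        href = dict(attrs).get('href')
        if not href:
            # Anchors without an href (or with an empty one) are skipped
            return
        absolute = urljoin(self.base_url, href)
        if urlparse(absolute).path.lower().endswith('.pdf'):
            self.pdf_urls.append(absolute)


def find_pdf_links(html, base_url):
    """Return the PDF links found in html, resolved against base_url."""
    parser = PdfLinkParser(base_url)
    parser.feed(html)
    return parser.pdf_urls


# Hypothetical page content, used only to show the filter at work
sample_html = '''
<a href="lecture1.pdf">Lecture 1</a>
<a href="notes/Lecture2.PDF">Lecture 2</a>
<a href="index.html">Home</a>
<a name="top">No href</a>
'''
sample_links = find_pdf_links(sample_html, 'https://example.edu/course/Lectures/')
for pdf_url in sample_links:
    print(pdf_url)
```

Only the two PDF links survive the filter; the HTML page and the anchor without an `href` are ignored.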

Single-threaded version

    import requests
    from bs4 import BeautifulSoup

    # Course page that lists the lecture PDFs
    url = 'https://inst.eecs.berkeley.edu/~ee127/fa19/Lectures/'
    page = requests.get(url).content
    soup = BeautifulSoup(page, 'html.parser')
    links = soup.find_all('a')

    # Download every link whose target ends in .pdf
    for link in links:
        href = link.get('href')
        # link.get returns None for anchors without an href, so guard against it
        if href and href.endswith('.pdf'):
            file_url = url + href
            with open(href, 'wb') as f:
                f.write(requests.get(file_url).content)

Downloading 25 files took about 180 seconds.
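The post does not say how this time was measured. One common way, shown here as a sketch, is to wrap the download step with `time.perf_counter`. The helper `timed` and the stand-in `fake_download_all` are hypothetical names; in practice the real download loop would be passed in instead.

```python
import time


def timed(func, *args, **kwargs):
    """Call func and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, time.perf_counter() - start


def fake_download_all():
    """Hypothetical stand-in for the download loop; sleeps instead of fetching."""
    time.sleep(0.05)
    return 25  # pretend 25 files were downloaded


count, elapsed = timed(fake_download_all)
print(f'downloaded {count} files in {elapsed:.2f} s')
```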

Multithreaded version

Sites hosted abroad like this one are often slow to download from, so for an I/O-bound task of this kind multithreading is well worth using.

    from threading import Thread

    import requests
    from bs4 import BeautifulSoup


    def download(url, href):
        # Fetch one PDF and save it under its link name
        r = requests.get(url + href)
        with open(href, 'wb') as f:
            f.write(r.content)


    url = 'https://inst.eecs.berkeley.edu/~ee127/fa19/Lectures/'
    page = requests.get(url).content
    soup = BeautifulSoup(page, 'html.parser')
    links = soup.find_all('a')

    # Start one thread per PDF link
    threads = []
    for link in links:
        href = link.get('href')
        # link.get returns None for anchors without an href, so guard against it
        if href and href.endswith('.pdf'):
            t = Thread(target=download, args=(url, href))
            threads.append(t)
            t.start()

    # Wait for all downloads to finish
    for t in threads:
        t.join()

This time, downloading the same files took only about 12 seconds, a clear improvement.
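The multithreaded version starts one thread per PDF, which is fine for 25 files but opens that many connections at once. A possible variation, sketched here and not part of the original post, is to cap concurrency with `concurrent.futures.ThreadPoolExecutor`. The names `download_all` and `fake_fetch` and the `example.edu` URLs are hypothetical; `fake_fetch` sleeps instead of touching the network so that the sketch runs anywhere, and a real `fetch` would call `requests.get` and write the file.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def download_all(fetch, urls, max_workers=8):
    """Run fetch(url) for every URL on a bounded thread pool.

    Results come back in the same order as urls. An exception raised
    inside fetch is re-raised here when its result is collected.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))


def fake_fetch(url):
    """Hypothetical stand-in for a real download: waits, then reports a size."""
    time.sleep(0.1)
    return len(url)


# Hypothetical URLs, used only to exercise the pool
urls = [f'https://example.edu/Lectures/lecture{i}.pdf' for i in range(1, 9)]
sizes = download_all(fake_fetch, urls, max_workers=4)
print(sizes)
```

At most `max_workers` downloads run at the same time, which is gentler on the server than one thread per file while keeping most of the speedup for I/O-bound work.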